The EU AI Act – A Play to Transform Global AI Regulation

In December 2023, the European Parliament and the Council of the EU reached agreement on the world’s first comprehensive AI law, a regulatory regime that has the potential to shape the development and implementation of AI systems in the coming decades, both in the EU and across the globe.

The EU says that the regulations are designed to ensure more accountability and transparency for AI applications deployed in the EU.

“For example, it is often not possible to find out why an AI system has made a decision or prediction and taken a particular action. So, it may become difficult to assess whether someone has been unfairly disadvantaged, such as in a hiring decision or in an application for a public benefit scheme.”

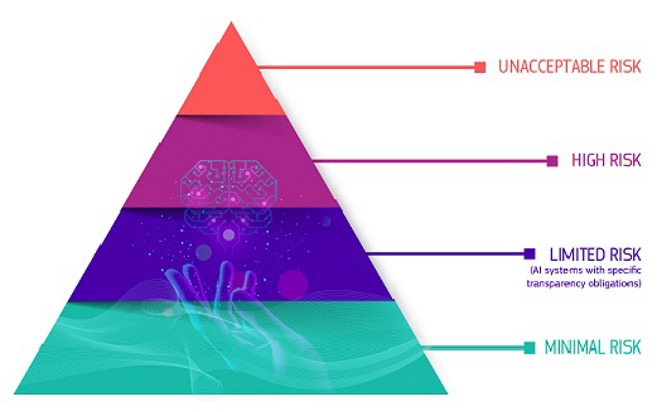

A Risk-Based Approach

Using a risk-based approach to regulation, the AI Act will classify AI systems based on their usage, with systems categorised as Minimal, Limited, High or Unacceptable Risk.

AI systems that fall into the Unacceptable Risk category, such as live facial recognition scanning and AI-driven social credit systems, will be banned outright.

Despite the ban on live facial recognition scanning, there are specific exceptions for law enforcement in the event of an imminent terrorist threat or child kidnapping.

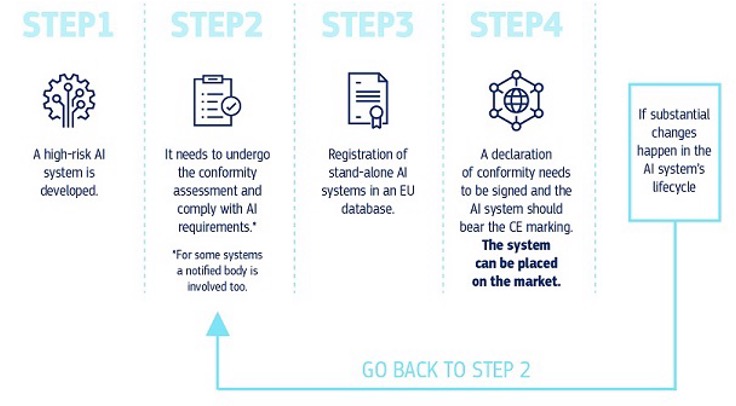

High Risk classified systems will be subject to a strict regulatory regime before they can be marketed in the EU.

The EU says that the High Risk category will be applied to a range of systems in areas such as critical infrastructure (for example, transport or energy), education, safety, HR, public services, credit ratings, migration, border control, elections, government and the courts.

AI Systems falling into these categories will be required to adhere to a higher standard of regulatory oversight and to ensure the implementation of:

· Risk assessment and mitigation systems

· High-quality datasets that reduce the risk of errors or discrimination

· Activity Logging

· Clear documentation of system operations

· Clear and adequate guidance to the deployer of the AI

· Human oversight

· Robust, secure and accurate operations

The following diagram from the EU shows the compliance process for High Risk applications:

Limited Regulations for Limited Risks

Limited Risk applications, such as chatbots and generative AI systems, will have a lower threshold for regulation. The regime requires that AI systems operate transparently, that their use is disclosed to end users, and that any AI-generated content is disclosed as such. Deepfake content and AI-generated content on matters of public interest must be disclosed under this regime.

Minimal Risk applications, such as spam filters and AI-based video games, will not be subject to regulatory restrictions under the Act.
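The four tiers described above can be pictured as a simple lookup table. The following is a minimal sketch only: the names `RiskTier`, `EXAMPLE_TIERS`, `OBLIGATIONS` and `lookup_tier` are hypothetical, the entries are limited to the examples mentioned in this article, and a real classification would depend on the legal text and the context in which a system is used.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Example use cases named in this article, mapped to the tier the article gives them.
# Illustrative only: this is not a legal classification.
EXAMPLE_TIERS = {
    "spam filter": RiskTier.MINIMAL,
    "video game": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "generative ai": RiskTier.LIMITED,
    "credit rating": RiskTier.HIGH,
    "border control": RiskTier.HIGH,
    "critical infrastructure": RiskTier.HIGH,
    "social credit system": RiskTier.UNACCEPTABLE,
    "live facial recognition": RiskTier.UNACCEPTABLE,
}

# One-line summary of what each tier implies, paraphrased from the article.
OBLIGATIONS = {
    RiskTier.MINIMAL: "no regulatory restrictions under the Act",
    RiskTier.LIMITED: "transparency and disclosure to end users",
    RiskTier.HIGH: "strict regulatory regime before marketing in the EU",
    RiskTier.UNACCEPTABLE: "banned outright, with narrow law enforcement exceptions",
}


def lookup_tier(use_case: str) -> Optional[RiskTier]:
    """Return the example tier for a use case, or None if it is not in the table."""
    return EXAMPLE_TIERS.get(use_case.strip().lower())
```

A use case that is missing from the table returns `None` rather than a default tier, so an unknown system is flagged for a proper assessment instead of being silently treated as low risk.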

How to Comply?

The AI Act will take some years to come into effect, with individual EU countries setting up AI regulatory bodies to implement the provisions of the Act, in a similar manner to the setup of the data protection (GDPR) regulators.

For businesses planning on using or implementing AI systems in the EU, it is important to start planning for compliance now by taking the following key steps:

· Review and comply with the EU’s AI Pact, a voluntary industry code that encourages AI providers to adhere to the AI Act in advance of its formal implementation.

· Transparently disclose the use of AI to your employees, customers, clients and other key stakeholders.

· Make clear documentation available that explains the workings of, or decision-making structures in, any AI system you implement.

· Put appropriate logging in place so that you can identify and deal with issues when they arise.

· Prepare policies and procedures that your organisation can adhere to when using the AI system.

· Ensure human oversight of AI systems.

· Establish escalation and dispute resolution processes to deal with issues as they arise.

· Conduct risk assessments prior to implementation to determine the risk category and ensure that the system is compliant with key guidelines for that category.

· When dealing with High Risk AI systems, ensure you have clear legal and technical advice to inform your implementation strategy and clear alignment with your Data Protection policies.
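As one possible illustration of the logging, human oversight and escalation steps in the list above, the sketch below writes one structured record per AI decision. It is an assumption-laden example rather than anything the Act prescribes: the logger name `ai_audit`, the field names, and the rule that a High Risk decision without a named reviewer needs escalation are all hypothetical choices.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional

logger = logging.getLogger("ai_audit")  # hypothetical logger name


def build_audit_record(
    system_name: str,
    risk_tier: str,
    decision: str,
    human_reviewer: Optional[str] = None,
) -> dict:
    """Assemble one audit record for a single AI decision."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system": system_name,
        "risk_tier": risk_tier,
        "decision": decision,
        "human_reviewer": human_reviewer,
        # Assumed policy: High Risk decisions with no reviewer are escalated.
        "needs_escalation": risk_tier == "high" and human_reviewer is None,
    }


def log_decision(record: dict) -> str:
    """Serialise the record as one JSON line, log it, and return the line."""
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line
```

Keeping each record as a single JSON line makes it straightforward to search the log later when a customer disputes a decision or a regulator asks how an outcome was reached.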

Implementation

The AI Act was first agreed by the European Parliament and the Council of the EU in December 2023, and its provisions will come into force over the coming 24-36 months.

Just as the GDPR has had a global impact on the development of data protection regimes, the AI Act can be expected to influence policy makers and the development of AI systems not just in Europe but throughout the world.

Adhering to the AI Act is likely to become best practice for developers who want to ensure that their systems are developed and implemented in a trustworthy, credible and socially responsible manner. Doing so will also allow for seamless access to EU markets and to countries that follow the EU’s lead.

For more see: Shaping Europe’s digital future: The AI Act